This blog was updated on a semi-daily basis by Joey during the year of work concentrating on the git-annex assistant that was funded by his kickstarter campaign.

Post-kickstarter work will instead appear on the devblog. However, this page's RSS feed will continue to work, so you don't have to migrate your RSS reader.

Yesterday I cut another release. However, getting an OSX build took until 12:12 pm today because of a confusion about the location of lsof on OSX. The OSX build is now available, and I'm looking forward to hearing if it's working!

Today I've been working on making git annex sync commit in direct mode.

For this I needed to find all new, modified, and deleted files, and I also

need the git SHA from the index for all non-new files. There's not really

an ideal git command to use to query this. For now I'm using

git ls-files --others --stage, which works but lists more files than I

really need to look at. It might be worth using one of the Haskell libraries

that can directly read git's index.. but for now I'll stick with ls-files.

It has to check all direct mode files whose content is present, which means

one stat per file (on top of the stat that git already does), as well as one

retrieval of the key per file (using the single git cat-file process that

git-annex talks to).

This is about as efficient as I can make it, except that unmodified

annexed files whose content is not present are listed due to --stage,

and so it has to stat those too, and currently also feeds them into git add.

The assistant will be able to avoid all this work, except once at startup.

Anyway, direct mode committing is working!

For now, git annex sync in direct mode also adds new files. This because

git annex add doesn't work yet in direct mode.

It's possible for a direct mode file to be changed during a commit, which would be a problem since committing involves things like calculating the key and caching the mtime/etc, that would be screwed up. I took care to handle that case; it checks the mtime/etc cache before and after generating a key for the file, and if it detects the file has changed, avoids committing anything. It could retry, but if the file is a VM disk image or something else that's constantly modified, commit retrying forever would not be good.

For git annex sync to be usable in direct mode, it still needs

to handle merging. It looks like I may be able to just enhance the automatic

conflict resolution code to know about typechanged direct mode files.

The other missing piece before this can really be used is that currently

the key to file mapping is only maintained for files added locally, or

that come in via git annex sync. Something needs to set up that mapping

for files present when the repo is initally cloned. Maybe the thing

to do is to have a git annex directmode command that enables/disables

direct mode and can setup the the mapping, as well as any necessary unlocks

and setting the trust level to untrusted.

Spent a while tracking down a bug that causes a crash on OSX when setting up an XMPP account. I managed to find a small test case that reliably crashes, and sent it off to the author of the haskell-gnutls bindings, which had one similar segfault bug fixed before with a similar test case. Fingers crossed..

Just finished tracking down a bug in the Android app that caused its terminal to spin and consume most CPU (and presumably a lot of battery). I introduced this bug when adding the code to open urls written to a fifo, due to misunderstanding how java objects are created, basically. This bug is bad enough to do a semi-immediate release for; luckily it's just about time for a release anyway with other improvements, so in the next few days..

Have not managed to get a recent ghc-android to build so far.

Guilhem fixed some bugs in git annex unused.

Today was a day off, really. However, I have a job running to try to build get a version of ghc-android that works on newer Android releases.

Also, guilhem's git annex unused speedup patch landed. The results are

extrordinary -- speedups on the order of 50 to 100 times faster should

not be uncommon. Best of all (for me), it still runs in constant memory!

After a couple days plowing through it, my backlog is down to 30 messages from 150. And most of what's left is legitimate bugs and todo items.

Spent a while today on an ugly file descriptor leak in the assistant's local pairing listener. This was an upstream bug in the network-multicast library, so while I've written a patch to fix it, the fix isn't quite deployed yet. The file descriptor leak happens when the assistant is running and there is no network interface that supports multicast. I was able to reproduce it by just disconnecting from wifi.

Meanwhile, guilhem has been working on patches that promise to massively

speed up git annex unused! I will be reviewing them tonight.

Made some good progress on the backlog today. Fixed some bugs, applied some patches. Noticing that without me around, things still get followed up on, to a point, for example incomplete test cases for bugs get corrected so they work. This is a very good thing. Community!

I had to stop going through the backlog when I got to one message from

Anarcat mentioning quvi. That turns

out to be just what is needed to implement the often-requested feature

of git-annex addurl supporting YouTube and other similar sites. So I

spent the rest of the day making that work. For example:

% git annex addurl --fast 'http://www.youtube.com/watch?v=1mxPFHBCfuU&list=PL4F80C7D2DC8D9B6C&index=1' addurl Star_Wars_X_Wing__Seth_Green__Clare_Grant__and_Mike_Lamond_Join_Wil_on_TableTop_SE2E09.webm ok

Yes, that got the video title and used it as the filename, and yes,

I can commit this file and run git annex get later, and it will be

able to go download the video! I can even use git annex fsck --fast

to make sure YouTube still has my videos. Awesome.

The great thing about quvi is it takes the url to a video webpage, and returns an url that can be used to download the actual video file. So it simplifies ugly flash videos as far out of existence as is possible. However, since the direct url to the video file may not keep working for long. addurl actually records the page's url, with an added indication that quvi should be used to get it.

Back home. I have some 170 messages of backlog to attend to. Rather than digging into that on my first day back, I spent some time implementing some new features.

git annex import has grown three options that help managing importing of

duplicate files in different ways. I started work on that last week, but

didn't have time to find a way to avoid the --deduplicate option

checksumming each imported file twice. Unfortunately, I have still not

found a way I'm happy with, so it works but is not as efficient as it could

be.

git annex mirror is a new command suggested to me by someone at DebConf

(they don't seem to have filed the requested todo). It arranges for two

repositories to contain the same set of files, as much as possible (when

numcopies allows). So for example, git annex mirror --to otherdrive

will make the otherdrive remote have the same files present and not present

as the local repository.

I am thinking about expanding git annex sync with an option to also sync

data. I know some find it confusing that it only syncs the git metadata

and not the file contents. That still seems to me to be the best and most

flexible behavior, and not one I want to change in any case since

it would be most unexpected if git annex sync downloaded a lot of stuff

you don't want. But I can see making git annex sync --data download

all the file contents it can, as well as uploading all available file

contents to each remote it syncs with. And git annex sync --data --auto

limit that to only the preferred content. Although perhaps

these command lines are too long to be usable?

With the campaign more or less over, I only have a little over a week before it's time to dive into the first big item on the roadmap. Hope to be through the backlog by then.

Wow, 11 days off! I was busy with first dentistry and then DebConf.

Yesterday I visited CERN and

got to talk with some of their IT guys about how they manage their tens of

petabytes of data. Interested to hear they also have the equivilant of a

per-subdirectory annex.numcopies setting. OTOH, they have half a billion

more files than git's index file is likely to be able to scale to support.

Pushed a release out today despite not having many queued changes. Also, I got git-annex migrated to Debian testing, and so was also able to update the wheezy backport to a just 2 week old version.

Today is also the last day of the campaign!

There has been a ton of discussion about git-annex here at DebConf, including 3 BoF sessions that mostly focused on it, among other git stuff. Also, RichiH will be presenting his "Gitify Your Life" talk on Friday; you can catch it on the live stream.

I've also had a continual stream of in-person bug and feature requests. (Mostly features.) These have been added to the wiki and I look forward to working on that backlog when I get home.

As for coding, I am doing little here, but I do have a branch cooking that

adds some options to git annex import to control handling of duplicate

files.

Made two big improvements to the Windows port, in just a few hours. First, got gpg working, and encrypted special remotes work on Windows. Next, fixed a permissions problem that was breaking removing files from directory special remotes on Windows. (Also cleaned up a lot of compiler warnings on Windows.)

I think I'm almost ready to move the Windows port from alpha to beta status. The only really bad problem that I know of with using it is that due to a lack of locking, it's not safe to run multiple git-annex commands at the same time in Windows.

Got the release out, with rather a lot of fiddling to fix broken builds on various platforms.

Also released a backport to Debian stable. This backport has the assistant, although without WebDAV support. Unfortunately it's an old version from May, since ghc transitions and issues have kept newer versions out of testing so far. Hope that will clear up soon (probably by dropping haskell support for s390x), and I can update it to a newer version. If nothing else it allows using direct mode with Debian stable.

Pleased that the git-cat-files bug was quickly fixed by Peff and has already been pulled into Junio's release tree!

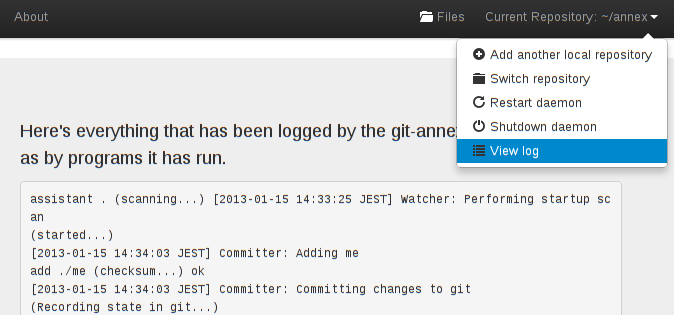

This evening, I've added an interface around the new improved

git check-ignore in git 1.8.4. The assistant can finally honor .gitignore

files!

Today was a nice reminder that there are no end of bugs lurking in filename handling code.

First, fixed a bug that prevented git-annex from adding filenames starting with ":", because that is a special character to git.

Second, discovered that git 1.8.4 rc0 has changed git-cat-file --batch in

a way that makes it impossible to operate on filenames containing spaces.

This is, IMHO, a reversion, so hopefully my

bug report will get it fixed.

Put in a workaround for that, although using the broken version of git with a direct mode repository with lots of spaces in file or directory names is going to really slow down git-annex, since it often has to fork a new git cat-file process for each file.

Release day tomorrow..

Turns out ssh-agent is the cause of the unknown UUID bug! I got a tip

about this from a user, and was quickly able to reproduce the bug that had

eluded me so long. Anyone who has run ssh-add and is using ssh-agent

would see the bug.

It was easy enough to fix as it turns out. Just need to set IdentitiesOnly in .ssh/config where git-annex has set up its own IdentityFile to ensure that its special purpose ssh key is used rather than whatever key the ssh-agent has loaded into it. I do wonder why ssh behaves this way -- why would I set an IdentityFile for a host if I didn't want ssh to use it?

Spent the rest of the day cleaning up after the bug. Since this affects so many people, I automated the clean up process. The webapp will detect repositories with this problem, and the user just has to click to clean up. It'll then correct their .ssh/config and re-enable the repository.

Back to bug squashing. Fixed several, including a long-standing problem on OSX that made the app icon seem to "bounce" or not work. Followed up on a bunch more.

The 4.20130723 git-annex release turns out to have broken support for

running on crippled filesystems (Android, Windows). git annex sync will

add dummy symlinks to the annex as if they were regular files, which is

not good!

Recovery instructions

I've updated the Android and Windows builds and recommend an immediate upgrade.

Will make a formal release on Friday.

Spent some time improving the test suite on Windows, to catch this bug,

and fix a bug that was preventing it from testing git annex sync on

Windows.

I am getting very frustrated with this "unknown UUID" problem that a dozen

people have reported. So far nobody has given me enough information to

reproduce the problem. It seems to have something to do with

git-annex-shell not being found on the remote system that has been either

local paired with or is being used as a ssh server, but I don't yet

understand what. I have spent hours today trying various scenarios to break

git-annex and get this problem to happen.

I certainly can improve the webapp's behavior when a repository's UUID is not known. The easiest fix would be to simply not display such repositories. Or there could be a UI to try to get the UUID. But I'm more interested in fixing the core problem than putting in a UI bandaid.

Technically offtopic, but did a fun side project today: http://joeyh.name/blog/entry/git-annex_as_a_podcatcher/

Worked on 3 interesting bugs today. One I noticed myself while doing tests with adding many thousands of files yesterday. Assistant was delaying making a last commit of the batch of files, and would only wake up and commit them after a further change was made. Turns out this bug was introduced in April while improving commit batching when making very large commits. I seem to remember someone mentioning this problem at some point, but I have not been able to find a bug report to close.

Also tried to reproduce ?this bug. Frustrating, because I'm quite sure I have made changes that will avoid it happening again, but since I still don't know what the root cause was, I can't let it go.

The last bug is ?non-repos in repositories list (+ other weird output) from git annex status and is a most strange thing. Still trying to get a handle on multiple aspects of it.

Also various other bug triage. Down to only 10 messages in my git-annex folder. That included merging about a dozen bugs about local pairing, that all seem to involve git-annex-shell not being found in path. Something is up with that..

The big news: Important behavior change in git annex dropunused. Now it

checks, just like git annex drop, that it's not dropping the last copy of

the file. So to lose data, you have to use --force. This continues the

recent theme of making git-annex hold on more tenaciously to old data, and

AFAIK it was the last place data could be removed without --force.

Also a nice little fix to git annex unused so it doesn't identify

temporary files as unused if they're being used to download a file.

Fixing it was easy thanks to all the transfer logs and locking

infrastucture built for the assistant.

Fixed a bug in the assistant where even though syncing to a network remote was disabled, it would still sync with it every hour, or whenever a network connection was detected.

Working on some direct mode scalability problems when thousands of the identical files are added. Fixing this may involvie replacing the current simple map files with something more scalable like a sqllite database.

While tracking that down, I also found a bug with adding a ton of files in indirect mode, that could make the assistant stall. Turned out to be a laziness problem. (Worst kind of Haskell bug.) Fixed.

Today's sponsor is my sister, Anna Hess, who incidentially just put the manuscript of her latest ebook in the family's annex prior to its publication on Amazon this weekend.

Seems I forgot why I was using debian stable chroots to make the autobuilds: Lots of people still using old glibc version. Had to rebuild the stable chroots that I had upgraded to unstable. Wasted several hours.. I was able to catch up on recent traffic in between.

Was able to reproduce a bug where git annex initremote hung with some

encrypted special remotes. Turns out to be a deadlock when it's not built

with the threaded GHC runtime. So I've forced that runtime to be used.

Got the release out.

I've been working on fleshing out the timeline for the next year. Including a fairly detailed set of things I want to do around disaster recovery in the assistant.

No release today after all. Unexpected bandwidth failure. Maybe in a few days..

Got unannex and uninit working in direct mode. This is one of the more subtle parts of git-annex, and took some doing to get it right. Surprisingly, unannex in direct mode actually turns out to be faster than in indirect mode. In direct mode it doesn't have to immediately commit the unannexing, it can just stage it to be committed later.

Also worked on the ssh connection caching code. The perrennial problem with that code is that the fifo used to communicate with ssh has a small limit on its path, somewhere around 100 characters. This had caused problems when the hostname was rather long. I found a way to avoid needing to be able to reverse back from the fifo name to the hostname, and this let me take the md5sum of long hostnames, and use that shorter string for the fifo.

Also various other bug followups.

Campaign is almost to 1 year!

git-annex has a new nicer versions of its logo, thanks to John Lawrence.

Finally tracked down a week-old bug about the watcher crashing. It turned out to crash when it encountered a directory containing a character that's invalid in the current locale. I've noticed that 'ü' is often the character I get bug reports about. After reproducing the bug I quickly tracked it down to code in the haskell hinotify library, and sent in a patch.

Also uploaded a fixed hinotify to Debian, and deployed it to all 3 of the autobuilder chroots. That took much more time than actually fixing the bug. Quite a lot of yak shaving went on actually. Oh well. The Linux autobuilders are updated to use Debian unstable again, which is nice.

Fixed a bug that prevented annex.diskreserve to be honored when storing files encrypted in a directory special remote.

Taught the webapp the difference between initializing a new special remote and enabling an existing special remote, which fixed some bad behavior when it got confused.

And then for the really fun bug of the day! A user sent me a large file which badly breaks git annex add. Adding the file causes a symlink to be set up, but the file's content is not stored in the annex. Indeed, it's deleted. This is the first data loss bug since January 2012.

Turns out it was caused by the code that handles the dummy files git uses

in place of symlinks on FAT etc filesystems. Code that had no business

running when core.symlinks=true. Code that was prone to false positives

when looking at a tarball of a git-annex repository. So I put in multiple

fixes for this bug. I'll be making a release on Monday.

Today's work was sponsored by Mikhail Barabanov. Thanks, Mikhail!

Succeeded fixing a few bugs today, and followed up on a lot of other ones..

Fixed checking when content is present in a non-bare repository accessed via http.

My changes a few days ago turned out to make uninit leave hard links behind in .git/annex. Luckily the test suite caught this bug, and it was easily fixed by making uninit delete objects with 2 or more hard links at the end.

Theme today seems to be fun with exceptions.

Fixed an uncaught exception that could crash the assistant's Watcher thread if just the right race occurred.

Also fixed it to not throw an exception if another process is

already transferring a file. What this means is that if you run multiple

git annex get processes on the same files, they'll cooperate in each

picking their own files to get and download in parallel. (Also works for

copy, etc.) Especially useful when downloading from an encrypted remote,

since often one process will be decrypting a file while the other is

downloading the next file. There is still room for improvement here;

a -jN option could better handle ensuring N downloads ran concurrently, and

decouple decryption from downloading. But it would need the output layer to

be redone to avoid scrambled output. (All the other stuff to make parallel

git-annex transfers etc work was already in place for a long time.)

Campaign update: Now funded for nearly 10 months, and aiming for a year. https://campaign.joeyh.name/

It looks like I'm funded for at least the next 9 months! It would still be

nice to get to a year.  https://campaign.joeyh.name/

https://campaign.joeyh.name/

Working to get caught up on recent bug reports..

Made git annex uninit not nuke anything that's left over in

.git/annex/objects after unannexing all the files. After all, that could

be important old versions of files or deleted file, and just because the

user wants to stop using git-annex, doesn't mean git-annex shouldn't try to

protect that data with its dying breath. So it prints out some suggestions

in this case, and leaves it up to the user to decide what to do with the

data.

Fixed the Android autobuilder, which had stopped including the webapp.

Looks like another autobuilder will be needed for OSX 10.9.

Surprise! I'm running a new crowdfunding campaign, which I hope will fund several more months of git-annex development.

Please don't feel you have to give, but if you do decide to, give

generously.  I'm accepting both Paypal and Bitcoin (via CoinBase.com),

and have some rewards that you might enjoy.

I'm accepting both Paypal and Bitcoin (via CoinBase.com),

and have some rewards that you might enjoy.

I came up with two lists of things I hope this campaign will fund. These are by no means complete lists. First, some general features and development things:

- Integrate better with Android.

- Get the assistant and webapp ported to Windows.

- Refine the automated stress testing tools to find and fix more problems before users ever see them.

- Automatic recovery. Cosmic ray flipped a bit in a file? USB drive corrupted itself? The assistant should notice these problems, and fix them.

- Encourage more contributions from others. For example, improve the special remote plugin interface so it can do everything the native Haskell interface can do. Eight new cloud storage services were added this year as plugins, but we can do better!

- Use deltas to reduce bandwidth needed to transfer modified versions of files.

Secondly, some things to improve security:

- Add easy support for encrypted git repositories using git-remote-gcrypt, so you can safely push to a repository on a server you don't control.

- Add support for setting up and using GPG keys in the webapp.

- Add protection to the XMPP protocol to guard against man in the middle attacks if the XMPP server is compromised. Ie, Google should not be able to learn about your git-annex repository even if you're using their servers.

- To avoid leaking even the size of your encrypted files to cloud storage providers, add a mode that stores fixed size chunks.

It will also, of course, fund ongoing bugfixing, support, etc.

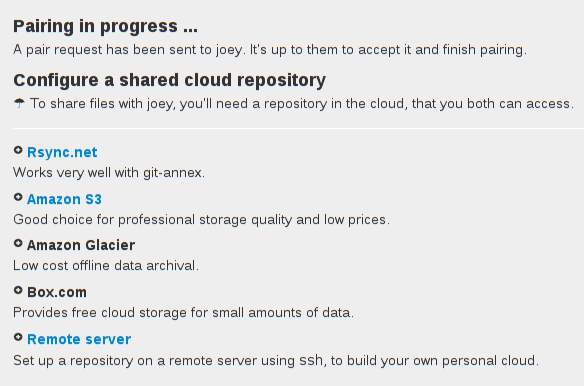

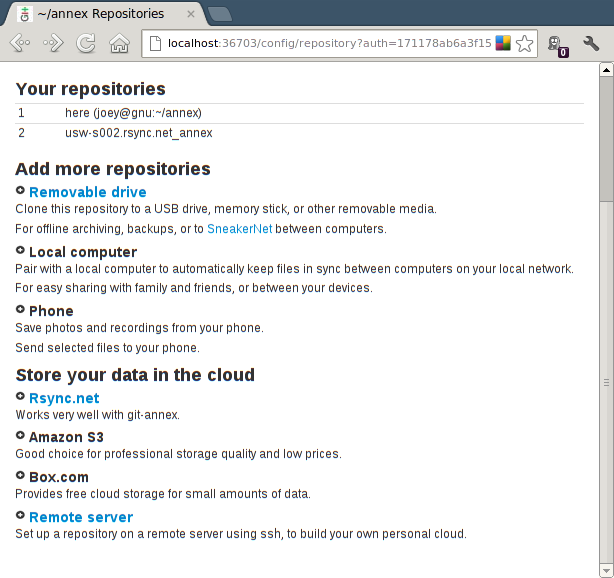

Been keeping several non-coding balls in the air recently, two of which landed today.

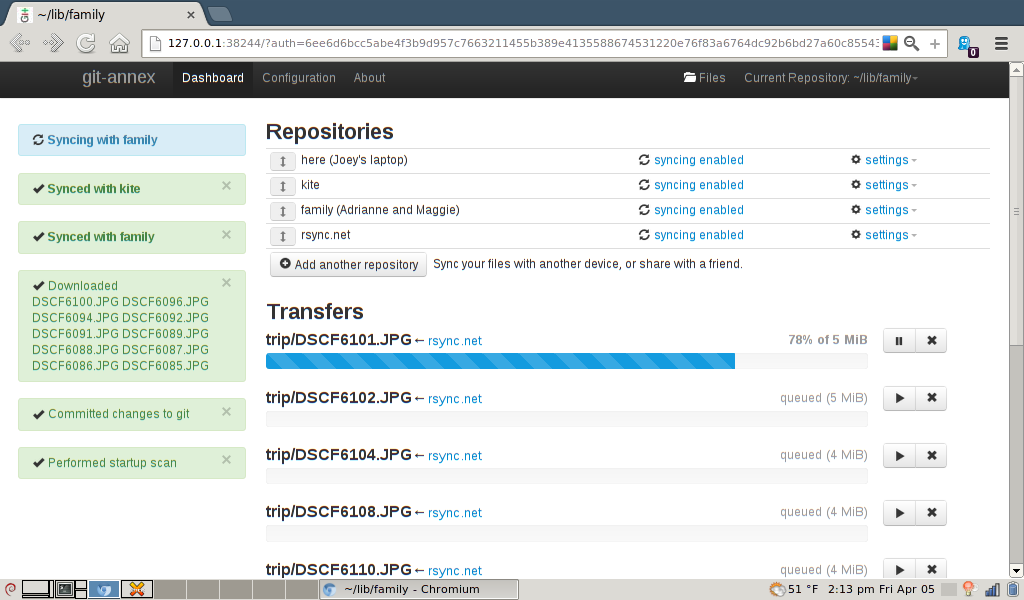

First, Rsync.net is offering a discount to all git-annex users, at one third their normal price. "People using git-annex are clueful and won't be a big support burden for us, so it's a win-win." The web app will be updated offer the discount when setting up a rsync.net repository.

Secondly, I've recorded an interview today for the Git Minutes podcast, about git-annex. Went well, looking forward to it going up, probably on Monday.

Got the release out, after fixing test suite and windows build breakage. This release has all the features on the command line side (--all, --unused, etc), but several bugfixes on the assistant side, and a lot of Windows bug fixes.

I've spent this evening adding icons to git-annex on Linux. Even got the Linux standalone tarball to automatically install icons.

Two gpg fixes today. The OSX Mtn Lion builds were pulling in a build of gpg that wanted a gpg-agent to be installed in /usr/local or it wouldn't work. I had to build my own gpg on OSX to work around this. I am pondering making the OSX dmg builds pull down the gpg source and build their own binary, so issues on the build system can't affect it. But would really rather not, since maintaining your own version of every dependency on every OS is hard (pity about there still being so many OS's without sane package management).

On Android, which I have not needed to touch for a month, gpg was built with --enable-minimal, which turned out to not be necessary and was limiting the encryption algorythms included, and led to interoperability problems for some. Fixed that gpg build too.

Also fixed an ugly bug in the webapp when setting up a rsync repository.

It would configure ~/.ssh/authorized_keys on the server to force

git-annex-shell to be run. Which doesn't work for rsync. I didn't notice

this before because it doesn't affect ssh servers that already have a ssh

setup that allows accessing them w/o a password.

Spent a while working on a bug that can occur in a non-utf8 locale when using special characters in the directory name of a ssh remote. I was able to reproduce it, but have not worked out how to fix it; encoding issues like this are always tricky.

Added something to the walkthrough to help convince people that yes, you can use tags and branches with git-annex just like with regular git. One of those things that is so obvious to the developer writing the docs that it's hard to realize it will be a point of concern.

Seems like there is a release worth of changes already, so I plan to push it out tomorrow.

Actually spread out over several days..

I think I have finally comprehensively dealt with all the wacky system

misconfigurations that can make git commit complain and refuse to commit.

The last of these is a system with a FQDN that doesn't have a dot in it.

I personally think git should just use the hostname as-is in the email

address for commits here -- it's better to be robust. Indeed, I think it

would make more sense if git commit never failed, unless it ran out of

disk or the repo is corrupt. But anyway, git-annex

init will now detect when the commit fails because of this and put a

workaround in place.

Fixed a bug in git annex addurl --pathdepth when the url's path was

shorter than the amount requested to remove from it.

Tracked down a bug that prevents git-annex from working on a system with an old linux kernel. Probably the root cause is that the kernel was built without EVENTFD support. Found a workaround to get a usable git-annex on such a system is to build it without the webapp, since that disables the threaded runtime which triggered the problem.

Dealt with a lot of Windows bugs. Very happy that it's working well enough

that some users are reporting bugs on it in Windows, and with enough detail

that I have not needed to boot Windows to fix them so far.

I've felt for a while that git-annex needed better support for managing the contents of past versions of files that are stored in the annex. I know some people get confused about whether git-annex even supports old versions of files (it does, but you should use indirect mode; direct mode doesn't guarantee old versions of files will be preserved).

So today I've worked on adding command-line power for managing past

versions: a new --all option.

So, if you want to copy every version of every file in your repository to

an archive, you can run git annex copy --all --to archive.

Or if you've got a repository on a drive that's dying, you can run

git annex copy --all --to newdrive, and then on the new drive, run git

annex fsck --all to check all the data.

In a bare repository, --all is default, so you can run git annex get

inside a bare repository and it will try to get every version of every file

that it can from the remotes.

The tricky thing about --all is that since it's operating on objects and

not files, it can't check .gitattributes settings, which are tied to the

file name. I worried for a long time that adding --all would make

annex.numcopies settings in those files not be honored, and that this would

be a Bad Thing. The solution turns out to be simple: I just didn't

implement git annex drop --all! Dropping is the only action that needs to

check numcopies (move can also reduce the number of copies, but explicitly

bypasses numcopies settings).

I also added an --unused option. So if you have a repository that has

been accumulating history, and you'd like to move all file contents not

currently in use to a central server, you can run git annex unused; git

annex move --unused --to origin

Spent too many hours last night tracking down a bug that caused the webapp

to hang when it got built with the new yesod 1.2 release. Much of that time

was spent figuring out that yesod 1.2 was causing the problem. It turned out to

be a stupid typo in my yesod compatability layer. liftH = liftH in

Haskell is an infinite loop, not the stack overflow you get in most

languages.

Even though it's only been a week since the last release, that was worth pushing a release out for, which I've just done. This release is essentially all bug fixes (aside from the automatic ionice and nicing of the daemon).

This website is now available over https. Perhaps more importantly, all the links to download git-annex builds are https by default.

The success stories list is getting really

nice. Only way it could possibly be nicer is if you added your story! Hint.

Came up with a fix for the gnucash hard linked file problem that makes the assistant notice the files gnucash writes. This is not full hard link support; hard linked files still don't cleanly sync around. But new hard links to files are noticed and added, which is enough to support gnucash.

Spent around 4 hours on reproducing and trying to debug ?Hanging on install on Mountain lion. It seems that recent upgrades of the OSX build machine led to this problem. And indeed, building with an older version of Yesod and Warp seems to have worked around the problem. So I updated the OSX build for the last release. I will have to re-install the new Yesod on my laptop and investigate further -- is this an OSX specific problem, or does it affect Linux? Urgh, this is the second hang I've encountered involving Warp..

Got several nice success stories, but I don't

think I've seen yours yet.  Please post!

Please post!

Got caught up on a big queue of messages today. Mostly I hear from people when git-annex is not working for them, or they have a question about using it. From time to time someone does mention that it's working for them.

We have 4 or so machines all synching with each other via the local network thing. I'm always amazed when it doesn't just explode

Due to the nature of git-annex, a lot of people can be using it without anyone knowing about it. Which is great. But these little success stories can make all the difference. It motivates me to keep pounding out the development hours, it encourages other people to try it, and it'd be a good thing to be able to point at if I tried to raise more funding now that I'm out of Kickstarter money.

I'm posting my own success story to my main blog: git annex and my mom

If you have a success story to share, why not blog about it, microblog it, or just post a comment here, or even send me a private message. Just a quick note is fine. Thanks!

Going through the bug reports and questions today, I ended up fixing three separate bugs that could break setting up a repo on a remote ssh server from the webapp.

Also developed a minimal test case for some gnucash behavior that prevents the watcher from seeing files it writes out. I understand the problem, but don't have a fix for that yet. Will have to think about it. (A year ago today, my blog featured the first release of the watcher.)

Pushed out a release today. While I've somewhat ramped down activity this month with the Kickstarter period over and summer trips and events ongoing, looking over the changelog I still see a ton of improvements in the 20 days since the last release.

Been doing some work to make the assistant daemon be more nice. I don't

want to nice the whole program, because that could make the web interface

unresponsive. What I am able to do, thanks to Linux violating POSIX, is to

nice certain expensive operations, including the startup scan and the daily

sanity check. Also, I put in a call to ionice (when it's available)

when git annex assistant --autostart is run, so the daemon's

disk IO will be prioritized below other IO. Hope this keeps it out of your

way while it does its job.

One of my Windows fixes yesterday got the test suite close to sort of working on Windows, and I spent all day today pounding on it. Fixed numerous bugs, and worked around some weird Windows behaviors -- like recursively deleting a directory sometimes fails with a permission denied error about a file in it, and leaves behind an empty directory. (What!?) The most important bug I fixed caused CR to leak into files in the git-annex branch from Windows, during a union merge, which was not a good thing at all.

At the end of the day, I only have 6 remaining failing test cases on

Windows. Half of them are some problem where running git annex sync

from the test suite stomps on PATH somehow and prevents xargs from working.

The rest are probably real bugs in the directory (again something to do

with recursive directory deletion, hmmm..), hook, and rsync

special remotes on Windows. I'm punting on those 6 for now, they'll be

skipped on Windows.

Should be worth today's pain to know in the future when I break something that I've oh-so-painfully gotten working on Windows.

Yay, I fixed the Handling of files inside and outside archive directory at the same time bug! At least in direct mode, which thanks to its associated files tracking knows when a given file has another file in the repository with the same content. Had not realized the behavior in direct mode was so bad, or the fix so relatively easy. Pity I can't do the same for indirect mode, but the problem is much less serious there.

That was this weekend. Today, I nearly put out a new release (been 2 weeks since the last one..), but ran out of time in the end, and need to get the OSX autobuilder fixed first, so have deferred it until Friday.

However, I did make some improvements today.

Added an annex.debug git config setting, so debugging can

be turned on persistently. People seem to expect that to happen when

checking the checkbox in the webapp, so now it does.

Fixed 3 or 4 bugs in the Windows port. Which actually, has users now, or at least one user. It's very handy to actually get real world testing of that port.

Today I got to deal with bugs on Android (busted use of cp among other

problems), Windows (fixed a strange hang when adding several files), and

Linux (.desktop files suck and Wine ships a particularly nasty one).

Pretty diverse!

Did quite a lot of research and thinking on XMPP encryption yesterday, but

have not run any code yet (except for trying out a D-H exchange in ghci).

I have listed several options on the XMPP page.

Planning to take a look at Handling of files inside and outside archive directory at the same time tomorrow; maybe I can come up with a workaround to avoid it behaving so badly in that case.

Got caught up on my backlog yesterday.

Part of adding files in direct mode involved removing write permission from them temporarily. That turned out to cause problems with some programs that open a file repeatedly, and was generally against the principle that direct mode files are always directly available. Happily, I was able to get rid of that without sacrificing any safety.

Improved syncing to bare repositories. Normally syncing pushes to a synced/master branch, which is good for non-bare repositories since git does not allow pushing to the currently checked out branch. But for bare repositories, this could leave them without a master branch, so cloning from them wouldn't work. A funny thing is that git does not really have any way to tell if a remote repository is bare or not. Anyway, I did put in a fix, at the expense of pushing twice (but the git data should only be transferred once anyway).

Slowly getting through the bugs that were opened while I was on vacation and then I'll try to get to all the comments. 60+ messages to go.

Got git-annex working better on encfs, which does not support hard links in paranoid mode. Now git-annex can be used in indirect mode, it doesn't force direct mode when hard links are not supported.

Made the Android repository setup special case generate a .gitignore file to ignore thumbnails. Which will only start working once the assistant gets .gitignore support.

Been thinking today about encrypting XMPP traffic, particularly git push data. Of course, XMPP is already encrypted, but that doesn't hide it from those entities who have access to the XMPP server or its encryption key. So adding client-to-client encryption has been on the TODO list all along.

OTR would be a great way to do it. But I worry that the confirmation steps OTR uses to authenticate the recipient would make the XMPP pairing UI harder to get through.

Particularly when pairing your own devices over XMPP, with several devices involved, you'd need to do a lot of cross-confirmations. It would be better there, I think, to just use a shared secret for authentication. (The need to enter such a secret on each of your devices before pairing them would also provide a way to use different repositories with the same XMPP account, so 2birds1stone.)

Maybe OTR confirmations would be ok when setting up sharing with a friend. If OTR was not used there, and it just did a Diffie-Hellman key exchange during the pairing process, it could be attacked by an active MITM spoofing attack. The attacker would then know the keys, and could decrypt future pushes. How likely is such an attack? This goes far beyond what we're hearing about. Might be best to put in some basic encryption now, so we don't have to worry about pushes being passively recorded on the server. Comments appreciated.

Today marks 1 year since I started working on the git-annex assistant. 280 solid days of work!

As a background task here at the beach I've been porting git-annex to yesod 1.2. Finished it today, earlier than expected, and also managed to keep it building with older versions. Some tricks kept the number of ifdefs reasonably low.

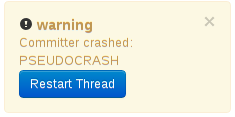

Landed two final changes before the release..

First, made git-annex detect if any of the several long-running git process

it talks to have died, and, if yes, restart them. My stress test is reliably

able to get at least git cat-file to crash, and while I don't know why (and

obviously should follow up by getting a core dump and stack trace of it),

the assistant needs to deal with this to be robust.

Secondly, wrote rather a lot of Java code to better open the web browser

when the Android app is started. A thread listens for URLs to be written to

a FIFO. Creating a FIFO from fortran^Wjava code is .. interesting. Glad to

see the back of the am command; it did me no favors.

AFK

Winding down work for now, as I prepare for a week at the beach starting in 2 days. That will be followed by a talk about git-annex at SELF2013 in Charlotte NC on June 9th.

Bits & pieces today.

Want to get a release out RSN, but I'm waiting for the previous release to finally reach Debian testing, which should happen on Saturday. Luckily I hear the beach house has wifi, so I will probably end up cutting the release from there. Only other thing I might work on next week is updating to yesod 1.2.

Yeah, Java hacking today. I have something that I think should deal with the ?Android app permission denial on startup problem. Added a "Open WebApp" item to the terminal's menu, which should behave as advertised. This is available in the Android daily build now, if your device has that problem.

I was not able to get the escape sequence hack to work. I had no difficulty modifying the terminal to send an intent to open an url when it received a custom escape sequence. But sending the intent just seemed to lock up the terminal for a minute without doing anything. No idea why. I had to propigate a context object in to the terminal emulator through several layers of objects. Perhaps that doesn't really work despite what I read on stackoverflow.

Anyway, that's all I have time to do. It would be nice if I, or some other interested developer who is more comfortable with Java, could write a custom Android frontend app, that embedded a web browser widget for the webapp, rather than abusing the terminal this way. OTOH, this way does provide the bonus of a pretty good terminal and git shell environment for Android to go with git-annex.

The fuzz testing found a file descriptor leak in the XMPP git push code. The assistant seems to hold up under fuzzing for quite a while now.

Have started trying to work around some versions of Android not letting

the am command be used by regular users to open a web browser on an URL.

Here is my current crazy plan: Hack the terminal emulator's title setting

code, to get a new escape sequence that requests an URL be opened. This

assumes I can just use startActivity() from inside the app and it will

work. This may sound a little weird, but it avoids me needing to set up a

new communications channel from the assistant to the Java app. Best of all,

I have to write very little Java code. I last wrote Java code in 1995, so

writing much more is probably a good thing to avoid.

Fuzz tester has found several interesting bugs that I've now fixed. It's even found a bug in my fixes. Most of the problems the fuzz testing has found have had to do with direct mode merges, and automatic merge conflict resoltion. Turns out the second level of automatic merge conflict resolution (where the changes made to resolve a merge conflict themselves turn out conflict in a later merge) was buggy, for example.

So, didn't really work a lot today -- was not intending to work at all actually -- but have still accomplished a lot.

(Also, Tobias contributed dropboxannex .. I'll be curious to see what the use case for that is, if any!)

Got caught up on some bug reports yesterday. The main one was odd behavior of the assistant when the repository was in manual mode. A recent change to the preferred content expression caused it. But the expression was not broken. The problem was in the parser, which got the parentheses wrong in this case. I had to mostly rewrite the parser, unfortunately. I've tested the new one fairly extensively -- on the other hand this bug lurked in the old parser for several years (this same code is used for matching files with command-line parameters).

Just as I finished with that, I noticed another bug. Turns out git-cat-file doesn't reload the index after it's started. So last week's changes to make git-annex check the state of files in the index won't work when using the assistant. Luckily there was an easy workaround for this.

Today I finished up some robustness fixes, and added to the test suite checks for preferred content expressions, manual mode, etc.

I've started a stress test, syncing 2 repositories over XMPP, with the fuzz tester running in each to create lots of changes to keep in sync.

The Android app should work on some more devices now, where hard linking to busybox didn't work. Now it installs itself using symlinks.

Pushed a point release so cabal install git-annex works again. And,

I'm really happy to see that the 4.20130521 release has autobuilt on all

Debian architectures, and will soon be replacing the old 3.20120629 version

in testing. (Well, once a libffi transition completes..)

TobiasTheMachine has done it again: ?googledriveannex

I spent most of today building a fuzz tester for the assistant. git annex

fuzztest will (once you find the special runes to allow it to run) create

random files in the repository, move them around, delete them, move

directory trees around, etc. The plan is to use this to run some long

duration tests with eg, XMPP, to make sure the assistant keeps things

in shape after a lot of activity. It logs in machine-readable format,

so if it turns up a bug I may even be able to use it to reproduce the same

bug (fingers crossed).

I was able to use QuickCheck to generate random data for some parts of the fuzz tester. (Though the actual file names it uses are not generated using QuickCheck.) Liked this part:

instance Arbitrary FuzzAction where

arbitrary = frequency

[ (100, FuzzAdd <$> arbitrary)

, (10, FuzzDelete <$> arbitrary)

, (10, FuzzMove <$> arbitrary <*> arbitrary)

, (10, FuzzModify <$> arbitrary)

, (10, FuzzDeleteDir <$> arbitrary)

, (10, FuzzMoveDir <$> arbitrary <*> arbitrary)

, (10, FuzzPause <$> arbitrary)

]

Tobias has been busy again today, creating a flickrannex special remote! Meanwhile, I'm thinking about providing a ?more complete interface so that special remote programs not written in Haskell can do some of the things the hook special remote's simplicity doesn't allow.

Finally realized last night that the main problem with the XMPP push code was an inversion of control. Reworked it so now there are two new threads, XMPPSendpack and XMPPReceivePack, each with their own queue of push initiation requests, that run the pushes. This is a lot easier to understand, probably less buggy, and lets it apply some smarts to squash duplicate actions and pick the best request to handle next.

Also made the XMPP client send pings to detect when it has been disconnected from the server. Currently every 120 seconds, though that may change. Testing showed that without this, it did not notice (for at least 20 minutes) when it lost routing to the server. Not sure why -- I'd think the TCP connections should break and this throw an error -- but this will also handle any idle disconnection problems that some XMPP servers might have.

While writing that, I found myself writing this jem using async, which has a comment much longer than the code, but basically we get 4 threads that are all linked, so when any dies, all do.

pinger `concurrently` sender `concurrently` receiver

Anyway, I need to run some long-running XMPP push tests to see if I've really ironed out all the bugs.

Got the bugfix release out.

Tobias contributed megaannex, which allows using mega.co.nz as a special remote. Someone should do this with Flickr, using filr. I have improved the hook special remote to make it easier to create and use reusable programs like megaannex.

But, I am too busy rewriting lots of the XMPP code to join in the special remote fun. Spent all last night staring at protocol traces and tests, and came to the conclusion that it's working well at the basic communication level, but there are a lot of bugs above that level. This mostly shows up as one side refusing to push changes made to its tree, although it will happily merge in changes sent from the other side.

The NetMessanger code, which handles routing messages to git commands and queuing other messages, seems to be just wrong. This is code I wrote in the fall, and have basically not touched since. And it shows. Spent 4 hours this morning rewriting it. Went all Erlang and implemented message inboxes using STM. I'm much more confident it won't drop messages on the floor, which the old code certainly did do sometimes.

Added a check to avoid unnecessary pushes over XMPP. Unfortunately, this required changing the protocol in a way that will make previous versions of git-annex refuse to accept any pushes advertised by this version. Could not find a way around that, but there were so many unnecessary pushes happening (and possibly contributing to other problems) that it seemed worth the upgrade pain.

Will be beating on XMPP a bit more. There is one problem I was seeing

last night that I cannot reproduce now. It may have been masked or even

fixed by these changes, but I need to verify that, or put in a workaround.

It seemed that sometimes this code in runPush would run the setup

and the action, but either the action blocked forever, or an exception

got through and caused the cleanup not to be run.

r <- E.bracket_ setup cleanup <~> a

Worked on several important bug fixes today. One affects automatic merge confict resolution, and can case data loss in direct mode, so I will be making a release with the fix tomorrow.

Practiced TDD today, and good thing too. The new improved test suite turned up a really subtle bug involving the git-annex branch vector clocks-ish code, which I also fixed.

Also, fixes to the OSX autobuilds. One of them had a broken gpg, which is now fixed. The other one is successfully building again. And, I'm switching the Linux autobuilds to build against Debian stable, since testing has a new version of libc now, which would make the autobuilds not work on older systems. Getting an amd64 chroot into shape is needing rather a lot of backporting of build dependencies, which I already did for i386.

Today I had to change the implementation of the Annex monad. The old one turned out to be buggy around exception handling -- changes to state recorded by code that ran in an exception handler were discarded when it threw an exception. Changed from a StateT monad to a ReaderT with a MVar. Really deep-level change, but it went off without a hitch!

Other than that it was a bug catch up day. Almost entirely caught up once more.

git-annex is now autobuilt for Windows on the same Jenkins farm that builds msysgit. Thanks for Yury V. Zaytsev for providing that! Spent about half of today setting up the build.

Got the test suite to pass in direct mode, and indeed in direct mode

on a FAT file system. Had to fix one corner case in direct mode git annex

add. Unfortunately it still doesn't work on Android; somehow git clone

of a local repository is broken there. Also got the test suite to build,

and run on Windows, though it fails pretty miserably.

Made a release.

I am firming up some ideas for post-kickstarter. More on that later.

In the process of setting up a Windows autobuilder, using the same jenkins installation that is used to autobuild msysgit.

Laid some groundwork for porting the test suite to Windows, and getting it

working in direct mode. That's not complete, but even starting to run the

test suite in direct mode and looking at all the failures (many of them

benign, like files not being symlinks) highlighted something

I have been meaning to look into for quite a while: Why, in direct mode,

git-annex doesn't operate on data staged in the index, but requires you

commit changes to files before it'll see them. That's an annoying

difference between direct and indirect modes.

It turned out that I introduced this behavior back on

January 5th, working around a nasty

bug I didn't understand. Bad Joey, should have root caused the bug at the

time! But the commit says I was stuck on it for hours, and it was

presenting as if it was a bug in git cat-file itself, so ok. Anyway,

I quickly got to the bottom of it today, fixed the underlying bug (which

was in git-annex, not git itself), and got rid of the workaround and its

undesired consequences. Much better.

The test suite is turning up some other minor problems with direct mode. Should have found time to port it earlier.

Also, may have fixed the issue that was preventing GTalk from working on Android. (Missing DNS library so it didn't do SRV lookups right.)

The Windows port can now do everything in the walkthrough. It can use both local and remote git repositories. Some special remotes work (directory at least; probably rsync; likely any other special remote that can have its dependencies built). Missing features include most special remotes, gpg encryption, and of course, the assistant.

Also built a NullSoft installer for git-annex today. This was made very easy when I found the Haskell ncis library, which provides a DSL embedding the language used to write NullSoft installers into Haskell. So I didn't need to learn a new language, yay! And could pull in all my helpful Haskell utility libraries in the program that builds the installer.

The only tricky part was: How to get git-annex onto PATH? The standard way to do this seems to be to use a multiple-hundred line include file. Of course, that file does not have any declared license.. Instead of that, I used a hack. The git installer for Windows adds itself to PATH, and is a pre-requisite for git-annex. So the git-annex installer just installs it into the same directory as git.

So.. I'll be including this first stage Windows port, with installer in the next release. Anyone want to run a Windows autobuilder?

Spent some time today to get caught up on bug reports and website traffic. Fixed a few things.

Did end up working on Windows for a while too. I got git annex drop

working. But nothing that moves content quite works yet..

I've run into a stumbling block with rsync. It thinks that

C:\repo is a path on a ssh server named "C". Seems I will need to translate

native windows paths to unix-style paths when running rsync.

It's remarkable that a bad decision made in 1982 can cause me to waste an

entire day in 2013. Yes, / vs \ fun time. Even though I long ago

converted git-annex to use the haskell </> operator wherever it builds

up paths (which transparently handles either type of separator), I still

spent most of today dealing with it. Including some libraries I use that

get it wrong. Adding to the fun is that git uses / internally, even on

Windows, so Windows separated paths have to be converted when being fed

into git.

Anyway, git annex add now works on Windows. So does git annex find,

and git annex whereis, and probably most query stuff.

Today was very un-fun and left me with a splitting headache, so I will certainly not be working on the Windows port tomorrow.

After working on it all day, git-annex now builds on Windows!

Even better, git annex init works. So does git annex status, and

probably more. Not git annex add yet, so I wasn't able to try much more.

I didn't have to add many stubs today, either. Many of the missing Windows features were only used in code paths that made git-annex faster, but I could fall back to a slower code path on Windows.

The things that are most problematic so far:

- POSIX file locking. This is used in git-annex in several places to make it safe when multiple git-annex processes are running. I put in really horrible dotfile type locking in the Windows code paths, but I don't trust it at all of course.

- There is, apparently, no way to set an environment variable in Windows from Haskell. It is only possible to set up a new process' environment before starting it. Luckily most of the really crucial environment variable stuff in git-annex is of this latter sort, but there were a few places I had to stub out code that tries to manipulate git-annex's own environment.

The windows branch has a diff of 2089 lines. It add 88 ifdefs to the code

base. Only 12 functions are stubbed out on Windows. This could be so much

worse.

Next step: Get the test suite to build. Currently ifdefed out because it

uses some stuff like setEnv and changeWorkingDirectory that I don't know

how to do in Windows yet.

Set up my Windows development environment. For future reference, I've installed:

- haskell platform for windows

- cygwin

- gcc and a full C toolchain in cygwin

- git from upstream (probably git-annex will use this)

- git in cygwin (the other git was not visible inside cygwin)

- vim in cygwin

- vim from upstream, as the cygwin vim is not very pleasant to use

- openssh in cygwin (seems to be missing a ssh server)

- rsync in cygwin

- Everything that

cabal install git-annexis able to install successfully.

This includes all the libraries needed to build regular git-annex, but not the webapp. Good start though.

Result basically feels like a linux system that can't decide which way slashes in paths go. :P I've never used Cygwin before (I last used a Windows machine in 2003 for that matter), and it's a fairly impressive hack.

Fixed up git-annex's configure program to run on Windows (or, at least, in Cygwin), and have started getting git-annex to build.

For now, I'm mostly stubbing out functions that use unix stuff. Gotten the first 44 of 300 source files to build this way.

Once I get it to build, if only with stubs, I'll have a good idea about all the things I need to find Windows equivilants of. Hopefully most of it will be provided by http://hackage.haskell.org/package/unix-compat-0.3.0.1.

So that's the plan. There is a possible shortcut, rather than doing a full port. It seems like it would probably not be too hard to rebuild ghc inside Cygwin, and the resulting ghc would probably have a full POSIX emulation layer going through cygwin. From ghc's documentation, it looks like that's how ghc used to be built at some point in the past, so it would probably not be too hard to build it that way. With such a cygwin ghc, git-annex would probably build with little or no changes. However, it would be a git-annex targeting Cygwin, and not really a native Windows port. So it'd see Cygwin's emulated POSIX filesystem paths, etc. That seems probably not ideal for most windows users.. but if I get really stuck I may go back and try this method.

It all came together for Android today. Went from a sort of working app to a fully working app!

- rsync.net works.

- Box.com appears to work -- at least it's failing with the same timeout I get on my linux box here behind the firewall of dialup doom.

- XMPP is working too!

These all needed various little fixes. Like loading TLS certificates from where they're stored on Android, and ensuring that totally crazy file permissions from Android (----rwxr-x for files?!) don't leak out into rsync repositories. Mostly though, it all just fell into place today. Wonderful..

The Android autobuild is updated with all of today's work, so try it out.

Fixed a nasty bug that affects at least some FreeBSD systems. It misparsed

the output of sha256, and thought every file had a SHA256 of "SHA256".

Added multiple layers of protection against checksum programs not having

the expected output format.

Lots more building and rebuilding today of Android libs than I wanted to do. Finally have a completly clean build, which might be able to open TCP connections. Will test tomorrow.

In the meantime, I fired up the evil twin of my development laptop. It's identical, except it runs Windows.

I installed the Haskell Platform for Windows on it, and removed some of the bloatware to free up disk space and memory for development. While a rather disgusting experience, I certainly have a usable Haskell development environment on this OS a lot faster than I did on Android! Cabal is happily installing some stuff, and other stuff wants me to install Cygwin.

So, the clock on my month of working on a Windows port starts now. Since I've already done rather a lot of ground work that was necessary for a Windows port (direct mode, crippled filesystem support), and for general sanity and to keep everything else from screeching to a halt, I plan to only spend half my time messing with Windows over the next 30 days.

Put in a fix for getprotobyname apparently not returning anything for

"tcp" on Android. This might fix all the special remotes there, but I don't

know yet, because I have to rebuild a lot of Haskell libraries to try it.

So, I spent most of today writing a script to build all the Haskell libraries for Android from scratch, with all my patches.

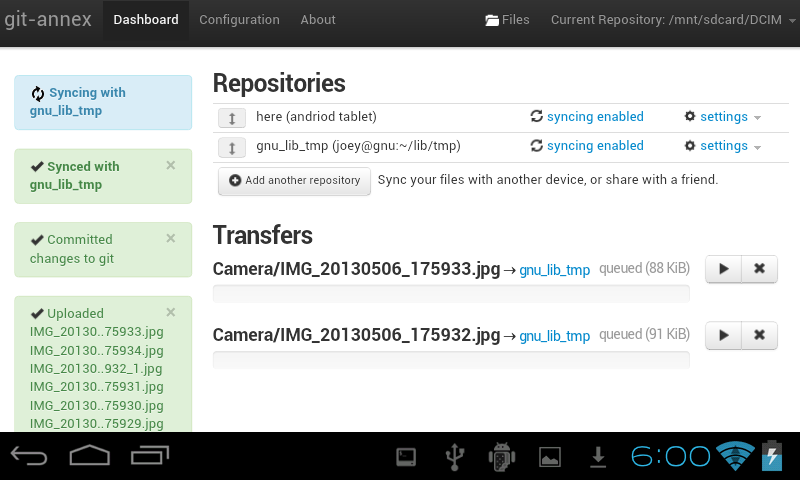

This seems a very auspicious day to have finally gotten the Android app doing something useful! I've fixed the last bugs with using it to set up a remote ssh server, which is all I need to make my Android tablet sync photos I take with a repository on my laptop.

I set this up entirely in the GUI, except for needing to switch to the terminal twice to enter my laptop's password.

How fast is it? Even several minute long videos transfer before I can

switch from the camera app to the webapp. To get this screenshot with it in

the process of syncing, I had to take a dozen pictures in a minute. Nice

problem to have.

Have fun trying this out for yourself after tonight's autobuilds. But a

warning: One of the bugs I fixed today had to be fixed in git-annex-shell,

as run on the ssh server that the Android connects to. So the Android app

will only work with ssh servers running a new enough version of git-annex.

Worked on geting git-annex into Debian testing, which is needed before the wheezy backport can go in. Think I've worked around most of the issues that were keeping it from building on various architectures.

Caught up on some bug reports and fixed some of them.

Created a backport of the latest git-annex release for Debian 7.0 wheezy. Needed to backport a dozen haskell dependencies, but not too bad. This will be available in the backports repository once Debian starts accepting new packages again. I plan to keep the backport up-to-date as I make new releases.

The cheap Android tablet I bought to do this last Android push with came pre-rooted from the factory. This may be why I have not seen this bug: ?Android app permission denial on startup. If you have Android 4.2.2 or a similar version, your testing would be helpful for me to know if this is a widespread problem. I have an idea about a way to work around the problem, but it involves writing Java code, and probably polling a file, ugh.

Got S3 support to build for Android. Probably fails to work due to the same network stack problems affecting WebDAV and Jabber.

Got removable media mount detection working on Android. Bionic has an

amusing stub for getmntent that prints out "FIX ME! implement

getmntent()". But, /proc/mounts is there, so I just parse it.

Also enabled the app's WRITE_MEDIA_STORAGE permission to allow

access to removable media. However, this didn't seem to do anything.

Several fixes to make the Android webapp be able to set up repositories on

remote ssh servers. However, it fails at the last hurdle with what

looks like a git-annex-shell communication problem. Almost there..

There's a new page Android that documents using git-annex on Android in detail.

The Android app now opens the webapp when a terminal window is opened. This is good enough for trying it out easily, but far from ideal.

Fixed an EvilSplicer bug that corrupted newlines in the static files served by the webapp. Now the icons in the webapp display properly, and the javascript works.

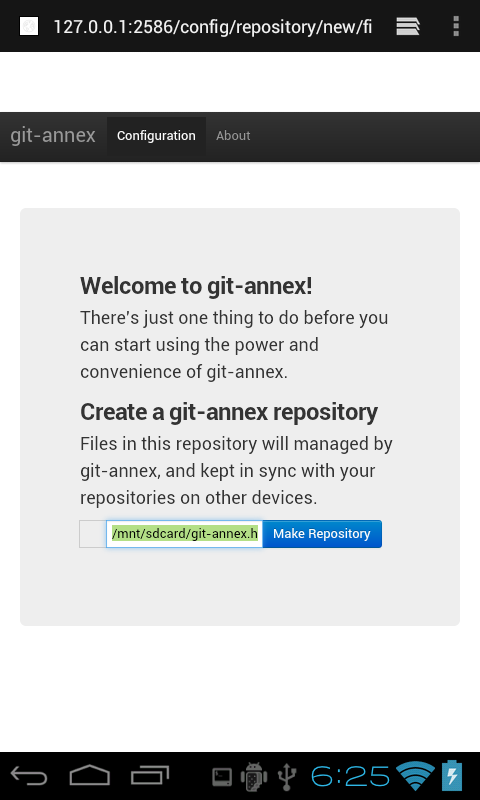

Made the startup screen default to /sdcard/annex for the repository

location, and also have a button to set up a camera repository. The camera

repository is put in the "source" preferred content group, so it will only

hang onto photos and videos until they're uploaded off the Android device.

Quite a lot of other small fixes on Android. At this point I've tested the following works:

- Starting webapp.

- Making a repository, adding files.

- All the basic webapp UI.

However, I was not able to add any remote repository using only the webapp, due to some more problems with the network stack.

- Jabber and Webdav don't quite work ("getProtocolByname: does not exist (no such protocol name: tcp)").

- SSH server fails. ("Network/Socket/Types.hsc:(881,3)-(897,61): Non-exhaustive patterns in case") I suspect it will work if I disable the DNS expansion code.

So, that's the next thing that needs to be tackled.

If you'd like to play with it in its current state, I've updated the Android builds to incorporate all my work so far.

I fixed what I thought was keeping the webapp from working on Android, but

then it started segfaulting every time it was started. Eventually I

determined this segfault happened whenever haskell code called

getaddrinfo. I don't know why. This is particularly weird since I had

a demo web server that used getaddrinfo working way back in

day 201 real Android wrapup. Anyway, I worked around it by not using

getaddrinfo on Android.

Then I spent 3 hours stuck, because the webapp seemed to run, but

nothing could connect to the port it was on. Was it a firewall? Was

the Haskell threaded runtime's use of accept() broken? I went all the way

down to the raw system calls, and back, only to finally notice I had netstat

available on my Android. Which showed it was not listening to the port I

thought it was!

Seems that ntohs and htons are broken somehow. To get the

screenshot, I fixed up the port manually. Have a build running that

should work around the issue.

Anyway, the webapp works on Android!

Pushed out a release today. Looking back over April, I'm happy with it as a bug fix and stabilization month. Wish I'd finished the Android app in April, but let's see what happens tomorrow.

Recorded part of a screencast on using Archive.org, but recordmydesktop

lost the second part. Grr. Will work on that later.

Took 2 days in a row off, because I noticed I have forgotten to do that since February, or possibly earlier, not counting trips. Whoops!

Also, I was feeling overwhelmed with the complexity of fixing XMPP to not be buggy when there are multiple separate repos using the same XMPP account. Let my subconscious work on that, and last night it served up the solution, in detail. Built it today.

It's only a partial solution, really. If you want to use the same XMPP account for multiple separate repositories, you cannot use the "Share with your other devices" option to pair your devices. That's because XMPP pairing assumes all your devices are using the same XMPP account, in order to avoid needing to confirm on every device each time you add a new device. The UI is clear about that, and it avoids complexity, so I'm ok with that.

But, if you want to instead use "Share with a friend", you now can use the same XMPP account for as many separate repositories as you like. The assistant now ignores pushes from repositories it doesn't know about. Before, it would merge them all together without warning.

While I was testing that, I think I found out the real reason why XMPP

pushes have seemed a little unreliable. It turns out to not be an XMPP

issue at all! Instead, the merger was simply not always

noticing when git receive-pack updated a ref, and not merging it into

master. That was easily fixed.

Adam Spiers has been getting a .gitignore query interface suitable for

the assistant to use into git, and he tells me it's landed in next.

I should soon check that out and get the assistant using it. But first,

Android app!

Turns out my old Droid has such an old version of Android (2.2) that it doesn't work with any binaries produced by my haskell cross-compiler. I think it's using a symbol not in its version of libc. Since upgrading this particular phone is a ugly process and the hardware is dying anyway (bad USB power connecter), I have given up on using it, and ordered an Android tablet instead to use for testing. Until that arrives, no Android. Bah. Wanted to get the Android app working in April.

Instead, today I worked on making the webapp require less redundant password entry when adding multiple repositories using the same cloud provider. This is especially needed for the Internet Archive, since users will often want to have quite a few repositories, for different IA items. Implemented it for box.com, and Amazon too.

Francois Marier has built an Ubuntu PPA for git-annex, containing the current version, with the assistant and webapp. It's targeted at Precise, but I think will probably also work with newer releases. https://launchpad.net/~fmarier/+archive/ppa

Probably while I'm waiting to work on Android again, I will try to improve the situation with using a single XMPP account for multiple repositories. Spent a while today thinking through ways to improve the design, and have some ideas.

Quiet day. Only did minor things, like adding webapp UI for changing the

directory used by Internet Archive remotes, and splitting out an

enableremote command from initremote.

My Android development environment is set up and ready to go on my Motorola Droid. The current Android build of git-annex fails to link at run time, so my work is cut out for me. Probably broke something while enabling XMPP?

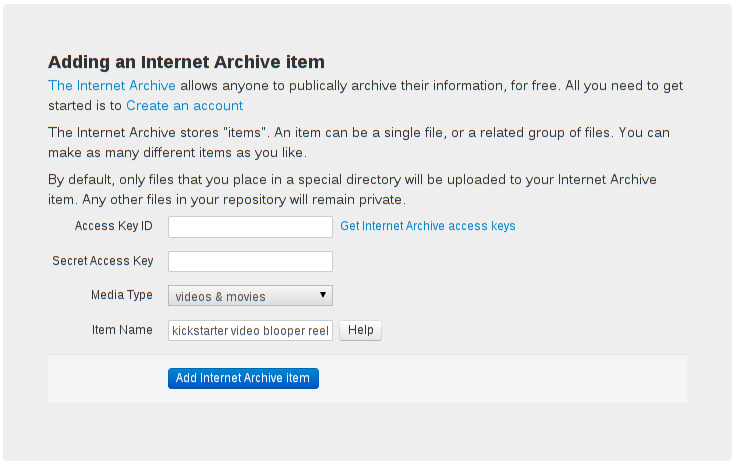

Very productive & long day today, spent adding a new feature to the webapp: Internet Archive support!

git-annex already supported using archive.org via its S3 special remotes, so this is just a nice UI around that.

How does it decide which files to publish on archive.org? Well, the item has a unique name, which is based on the description field. Any files located in a directory with that name will be uploaded to that item. (This is done via a new preferred content expression I added.)

So, you can have one repository with multiple IA items attached, and sort files between them however you like. I plan to make a screencast eventually demoing that.

Another interesting use case, once the Android webapp is done, would be add a repository on the DCIM directory, set the archive.org repository to prefer all content, and bam, you have a phone or tablet that auto-publishes and archives every picture it takes.

Another nice little feature added today is that whenever a file is uploaded

to the Internet Archive, its public url is automatically recorded, same

as if you'd ran git annex addurl. So any users who can clone your

repository can download the files from archive.org, without needing any

login or password info. This makes the Internet Archive a nice way to

publish the large files associated with a public git repository.

Working on assistant's performance when it has to add a whole lot of files (10k to 100k).

Improved behavior in several ways, including fixing display of the alert in the webapp when the default inotify limit of 8192 directories is exceeded.

Created a new TList data type, a transactional DList. Much nicer implementation than the TChan based thing it was using to keep track of the changes, although it only improved runtime and memory usage a little bit. The way that this is internally storing a function in STM and modifying that function to add items to the list is way cool.

Other tuning seems to have decreased the time it would take to import 100k files from somewhere in the range of a full day (too long to wait to see), to around 3.5 hours. I don't know if that's good, but it's certainly better.

There seem to be a steady state of enough bug reports coming in that I can work on them whenever I'm not working on anything else. As I did all day today.

This doesn't bother me if the bug reports are of real bugs that I can reproduce and fix, but I'm spending a lot of time currently following up to messages and asking simple questions like "what version number" and "can I please see the whole log file". And just trying to guess what a vague problem report means and read people's minds to get to a definite bug with a test case that I can then fix.

I've noticed the overall quality of bug reports nosedive over the past several months. My guess is this means that git-annex has found a less technical audience. I need to find something to do about this.

With that whining out of the way ... I fixed a pretty ugly bug on FAT/Android today, and I am 100% caught up on messages right now!

Got the OSX autobuilder back running, and finally got a OSX build up for the 4.20130417 release. Also fixed the OSX app build machinery to handle rpath.

Made the assistant (and git annex sync) sync with git remotes that have

annex-ignore set. So, annex-ignore is only used to prevent using

the annex of a remote, not syncing with it. The benefit of this change

is that it'll make the assistant sync the local git repository with

a git remote that is on a server that does not have git-annex installed.

It can even sync to github.

Worked around more breakage on misconfigured systems that don't have GECOS information.

... And other bug fixes and bug triage.

Ported all the C libraries needed for XMPP to Android. (gnutls, libgcrypt, libgpg-error, nettle, xml2, gsasl, etc). Finally got it all to link. What a pain.

Bonus: Local pairing support builds for Android now, seems recent changes to the network library for WebDAV also fixed it.

Today was not a work day for me, but I did get a chance to install git-annex in real life while visiting. Was happy to download the standalone Linux tarball and see that it could be unpacked, and git-annex webapp started just by clicking around in the GUI. And in very short order got it set up.

I was especially pleased to see my laptop noticed this new repository had appeared on the network via XMPP push, and started immediately uploading files to my rsync.net transfer repository so the new repository could get them.

Did notice that the standalone tarball neglected to install a FDO menu file. Fixed that, and some other minor issues I noticed.

I also got a brief chance to try the Android webapp. It fails to start;

apparently getaddrinfo doesn't like the flags passed to it and is

failing. As failure modes go, this isn't at all bad. I can certainly work

around it with some hardcoded port numbers, but I want to fix it the right

way. Have ordered a replacement battery for my dead phone so I can use it

for Android testing.

Got WebDAV enabled in the Android build. Had to deal with some system calls not available in Android's libc.

New poll: Android default directory

Finished the last EvilSplicer tweak and other fixes to make the Android webapp build without any hand-holding.

Currently setting up the Android autobuilder to include the webapp in its builds. To make this work I had to set up a new chroot with all the right stuff installed.

Investigated how to make the Android webapp open a web browser when run.

As far as I can tell (without access to an Android device right now),

am start -a android.intent.action.VIEW -d http://localhost/etc should do

it.

Seems that git 1.8.2 broke the assistant. I've put in a fix but have not yet tested it.

Late last night, I successfully built the full webapp for Android!

That was with several manual modifications to the generated code, which I still need to automate. And I need to set up the autobuilder properly still. And I need to find a way to make the webapp open Android's web browser to URL. So it'll be a while yet until a package is available to try. But what a milestone!

The point I was stuck on all day yesterday was generated code that looked like this:

(toHtml

(\ u_a2ehE -> urender_a2ehD u_a2ehE []

(CloseAlert aid)))));

That just couldn't type check at all. Most puzzling. My best guess is that

u_a2ehE is the dictionary GHC passes internally to make a typeclass work,

which somehow leaked out and became visible. Although

I can't rule out that I may have messed something up in my build environment.

The EvilSplicer has a hack in it that finds such code and converts it to

something like this:

(toHtml

(flip urender_a2ehD []

(CloseAlert aid)))));

I wrote some more about the process of the Android port in my personal blog: Template Haskell on impossible architectures

Release day today. The OSX builds are both not available yet for this release, hopefully will come out soon.

Several bug fixes today, and got mostly caught up on recent messages. Still have a backlog of two known bugs that I cannot reproduce well enough to have worked on, but I am thinking I will make a release tomorrow. There have been a lot of changes in the 10 days since the last release.

I am, frustratingly, stuck building the webapp on Android with no forward progress today (and last night) after such a productive day yesterday.